HAProxy

This walkthrough uses Ubuntu 20.04. HAProxy can be installed with the package manager, which installs a 2.x release with SSL support.

apt install -y haproxy

root@haproxy1:~# haproxy --version

HA-Proxy version 2.0.29-0ubuntu1 2022/08/26 - https://haproxy.org/

Usage : haproxy [-f <cfgfile|cfgdir>]* [ -vdVD ] [ -n <maxconn> ] [ -N <maxpconn> ]

[ -p <pidfile> ] [ -m <max megs> ] [ -C <dir> ] [-- <cfgfile>*]

-v displays version ; -vv shows known build options.

-d enters debug mode ; -db only disables background mode.

-dM[<byte>] poisons memory with <byte> (defaults to 0x50)

-V enters verbose mode (disables quiet mode)

-D goes daemon ; -C changes to <dir> before loading files.

-W master-worker mode.

-Ws master-worker mode with systemd notify support.

-q quiet mode : don't display messages

-c check mode : only check config files and exit

-n sets the maximum total # of connections (uses ulimit -n)

-m limits the usable amount of memory (in MB)

-N sets the default, per-proxy maximum # of connections (0)

-L set local peer name (default to hostname)

-p writes pids of all children to this file

-de disables epoll() usage even when available

-dp disables poll() usage even when available

-dS disables splice usage (broken on old kernels)

-dG disables getaddrinfo() usage

-dR disables SO_REUSEPORT usage

-dr ignores server address resolution failures

-dV disables SSL verify on servers side

-sf/-st [pid ]* finishes/terminates old pids.

-x <unix_socket> get listening sockets from a unix socket

-S <bind>[,<bind options>...] new master CLI

HAProxy configuration

Create or edit the file below. The configuration file is identical on every HAProxy node.

/etc/haproxy/haproxy.cfg

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners

stats timeout 30s

user haproxy

group haproxy

daemon

# Default SSL material locations

ca-base /etc/ssl/certs

crt-base /etc/ssl/private

# See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate

ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384

ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256

ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults

log global

mode http

option httplog

option dontlognull

timeout connect 5000

timeout client 50000

timeout server 50000

errorfile 400 /etc/haproxy/errors/400.http

errorfile 403 /etc/haproxy/errors/403.http

errorfile 408 /etc/haproxy/errors/408.http

errorfile 500 /etc/haproxy/errors/500.http

errorfile 502 /etc/haproxy/errors/502.http

errorfile 503 /etc/haproxy/errors/503.http

errorfile 504 /etc/haproxy/errors/504.http

listen stats

bind *:8080

stats enable

stats uri /

stats realm HAProxy\ Statistics

stats auth admin:1234

stats refresh 10s

frontend mysql-front

bind *:3306

mode tcp

default_backend mysql-back

backend mysql-back

mode tcp

option mysql-check user haproxy

balance roundrobin

server db1 172.16.0.20:3306 check

server db2 172.16.0.21:3306 check backup weight 2

server db3 172.16.0.22:3306 check backup weight 1

This configuration keeps db1 active and leaves db2 and db3 on standby as backup servers. If db1 fails, the next available node (db2, then db3) becomes active.
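Because every node shares this file, it can be worth having HAProxy parse it before restarting the service. The sketch below wraps the `-c` check mode listed in the usage output above. The function name `validate_haproxy_cfg` is hypothetical, and the default path is simply the one used in this post.

```shell
# Hypothetical helper: run HAProxy in check mode (-c) against a config file.
# -c only parses the configuration and exits; it does not start the proxy.
validate_haproxy_cfg() {
    cfg="${1:-/etc/haproxy/haproxy.cfg}"
    if haproxy -c -f "$cfg"; then
        echo "config OK: $cfg"
    else
        echo "config INVALID: $cfg" >&2
        return 1
    fi
}
```

Running `validate_haproxy_cfg && systemctl restart haproxy` on each node would then restart the service only when the file parses cleanly.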

mysql-check option

HAProxy must be able to connect to MySQL in order to check the state of the nodes listed in the backend, so add a haproxy user on each database node.

MariaDB [(none)] > create user 'haproxy'@'172.16.0.%';

Keepalived

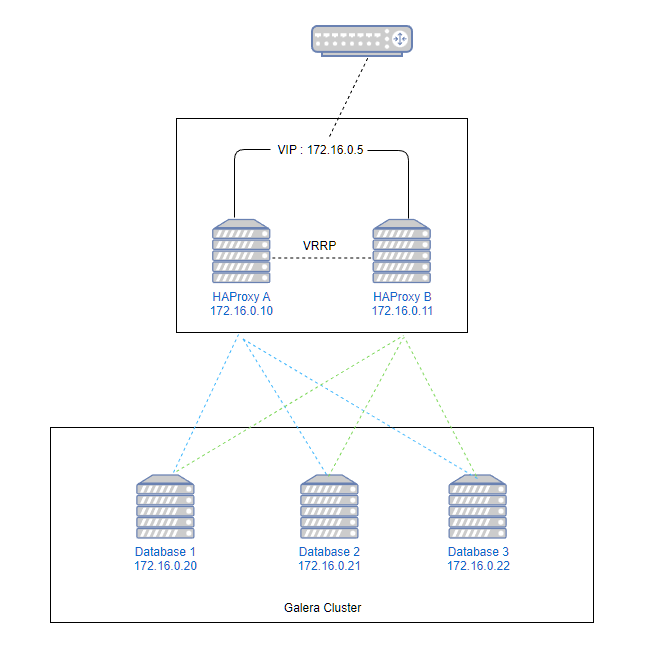

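keepalived is needed to make HAProxy itself redundant. The master node holds the VIP, and if the master fails, keepalived binds the VIP to the backup node.

apt install -y keepalived

A kernel setting must be changed so that a virtual IP other than the host's local addresses can be bound.

Add or modify the following line in the file below.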

/etc/sysctl.conf

net.ipv4.ip_nonlocal_bind = 1

root@haproxy1:/etc/keepalived# sysctl -p

net.ipv4.ip_nonlocal_bind = 1
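To confirm that the setting is active on a node, the current value can also be read back from `/proc/sys`. This is a minimal sketch. `nonlocal_bind_enabled` is a hypothetical helper name, and the optional path argument exists only so the check can be pointed at another file.

```shell
# Hypothetical helper: succeed when non-local binding is enabled.
# The kernel exposes the live value of net.ipv4.ip_nonlocal_bind under /proc/sys.
nonlocal_bind_enabled() {
    path="${1:-/proc/sys/net/ipv4/ip_nonlocal_bind}"
    [ "$(cat "$path" 2>/dev/null)" = "1" ]
}

if nonlocal_bind_enabled; then
    echo "ip_nonlocal_bind is enabled"
else
    echo "ip_nonlocal_bind is NOT enabled"
fi
```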

HAProxy node 1

/etc/keepalived/keepalived.conf

global_defs {

router_id HAProxy1

}

vrrp_script check_haproxy {

script "killall -0 haproxy"

interval 2

weight 2

}

vrrp_instance VIS_TEST {

interface eth0

state MASTER

priority 101

virtual_router_id 51

advert_int 1

virtual_ipaddress {

172.16.0.5

}

track_script {

check_haproxy

}

}
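The `check_haproxy` script in this configuration relies on `killall -0 haproxy`. Signal 0 is not delivered to the process; the command's exit status only reports whether a matching process exists. The sketch below shows the same probe with `kill -0` on a PID, so that it runs on a machine without HAProxy. `process_alive` is a hypothetical name.

```shell
# Hypothetical helper: succeed when a process with the given PID exists
# (and the caller is allowed to signal it). Signal 0 performs only the
# existence and permission check; nothing is delivered to the target.
process_alive() {
    kill -0 "$1" 2>/dev/null
}

# The current shell is running, so this takes the first branch.
if process_alive "$$"; then
    echo "alive"
else
    echo "not running"
fi
```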

HAProxy node 2

/etc/keepalived/keepalived.conf

global_defs {

router_id HAProxy2

}

vrrp_script check_haproxy {

script "killall -0 haproxy"

interval 2

weight 2

}

vrrp_instance VIS_TEST {

interface eth0

state MASTER

priority 100

virtual_router_id 51

advert_int 1

virtual_ipaddress {

172.16.0.5

}

track_script {

check_haproxy

}

}

router_id must be different on each node.

priority must be higher on the master.

virtual_ipaddress is the VIP. The master binds it, and if the master fails the other node binds it instead, which is what provides the redundancy.
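The one-point gap between the two priorities works together with `weight 2` in `vrrp_script`. As I understand keepalived's behaviour for a positive weight, the weight is added to the priority while the tracked script succeeds. The arithmetic below is only an illustration of that rule with the values from the two configurations; `effective_priority` is a hypothetical helper, not part of keepalived.

```shell
# Sketch of the priority adjustment for a tracked script with a positive
# weight: the weight is added only while the script succeeds.
# Usage: effective_priority BASE WEIGHT SCRIPT_OK   (SCRIPT_OK is 1 or 0)
effective_priority() {
    base=$1
    weight=$2
    ok=$3
    if [ "$ok" -eq 1 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}

node1_up=$(effective_priority 101 2 1)    # HAProxy running on node 1
node1_down=$(effective_priority 101 2 0)  # HAProxy stopped on node 1
node2_up=$(effective_priority 100 2 1)    # HAProxy running on node 2

echo "node1 up=$node1_up down=$node1_down, node2 up=$node2_up"
# prints: node1 up=103 down=101, node2 up=102
```

With HAProxy stopped on node 1, its priority (101) falls below node 2's (102), which is why node 2 takes over the VIP.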

Restart the service

systemctl restart keepalived.service

Check the network

The VIP is bound on node 1. If the haproxy service is stopped on node 1, the VIP can be seen moving to node 2.

root@haproxy1:/etc/haproxy# ip a

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether xx:xx:xx:xx:xx:xx brd ff:ff:ff:ff:ff:ff

inet 172.16.0.10/24 brd 172.16.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet 172.16.0.5/32 scope global eth0

valid_lft forever preferred_lft forever

root@haproxy2:/etc/haproxy# ip a

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether xx:xx:xx:xx:xx:xx brd ff:ff:ff:ff:ff:ff

inet 172.16.0.11/24 brd 172.16.0.255 scope global eth0

valid_lft forever preferred_lft forever
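Instead of reading the full `ip a` output on each node, the check can be scripted. This is a minimal sketch, assuming the VIP `172.16.0.5` and interface `eth0` from this post as defaults; `has_vip` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed when the given IPv4 address is configured
# on the given interface. "ip -4 -o" prints one line per IPv4 address.
has_vip() {
    vip="${1:-172.16.0.5}"
    dev="${2:-eth0}"
    # The trailing "/" keeps 172.16.0.5 from matching 172.16.0.50 and similar.
    ip -4 -o addr show dev "$dev" 2>/dev/null | grep -qF "inet $vip/"
}
```

Running `has_vip && echo "VIP is bound here"` on both nodes before and after stopping haproxy on node 1 should show the VIP changing hosts.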